With all due respect, I believe my elected representatives as well as my fellow Australians misunderstand the issue of Internet censorship. Below I offer my perspective, which I hope can re-position the debate with a more complete understanding of the issues.

Background

The Australian Labor Party’s Internet filter policy was a reaction to the Howard Government’s family-based approach, which Labor said had failed. The then Leader of the Opposition, Kim Beazley, announced in March 2006 (Internet Archive) that under Labor “all Internet Service Providers will be required to offer a filtered ‘clean feed’ Internet service to all households, and to schools and other public internet points accessible by kids.” The same press release states: “Through an opt-out system, adults who still want to view currently legal content would advise their Internet Service Provider (ISP) that they want to opt out of the ‘clean feed’, and would then face the same regulations which currently apply.”

During the 2007 Federal election campaign, Labor under Kevin Rudd made the pledge that “a Rudd Labor Government will require ISPs to offer a ‘clean feed’ Internet service to all homes, schools and public Internet points accessible by children, such as public libraries. Labor’s ISP policy will prevent Australian children from accessing any content that has been identified as prohibited by ACMA, including sites such as those containing child pornography and X-rated material.”

Following the election, the Minister for Broadband, Communications and the Digital Economy, Senator Stephen Conroy, clarified in December 2007 that anyone wanting uncensored access to the Internet would have to opt out of the service.

In October 2008, the policy shifted again, subtly yet dramatically. When examined by a Senate Estimates committee, Senator Conroy stated that “we are looking at two tiers – mandatory of illegal material and an option for families to get a clean feed service if they wish.” Conroy further said: “We would be enforcing the existing laws. If investigated material is found to be prohibited content then ACMA may order it to be taken down if it is hosted in Australia. They are the existing laws at the moment.”

This statement, which motivated this paper and sparked outrage among Australians nationwide, has been interpreted to mean that all Internet connection points in Australia will be subject to the filter, with the option to opt out of the family tier but not the tier that blocks ‘illegal material’. While the term “mandatory” has been used in the policy before, it has always meant that ISPs must offer such a service. It was never used to mean that Australians on the Internet would be required to use it.

Not only is this a departure from the Rudd Government’s election pledge, but there is little evidence that it reflects what the Australian community is asking for. Senator Conroy himself has pointed to evidence that the previous government’s NetAlert policy fell far below expectations. According to Conroy, 1.4 million families were expected to download the filter, but far fewer actually did: he estimates end usage at just 30,000, despite a $22 million advertising campaign. The government’s pursuit of this policy, therefore, is for its own ideological or political benefit. The Australian people never gave it a mandate, nor is there evidence of majority support for this agenda. Further, the government’s trials to date have shown the technology to be ineffective.

By 27 October, some 9,000 people had signed a petition opposing a government filter. At the time of writing this letter on 2 November, the number has climbed to 13,655. The community is watching the government’s moves closely, and activities are being planned in response should the policy continue in its current direction.

I write to describe the impact such a policy will have if it goes ahead, in the hope of informing both the government and the public.

Impacts on Australia

Context

The government’s approach to filtering is one-dimensional and does not take into account the converged world of the Internet. The Internet has transformed, and will continue to transform, our world. It has become a utility that forms the backbone of our economy and communications. Fast and widespread access to the Internet has been recognised globally as a policy priority by political and business leaders around the world.

The Internet typically allows three broad types of activity. The first is facilitating the exchange of goods and services. The Internet has become a means of creating a more efficient marketplace, and is well known to drive offline sales as well, because it creates better-informed consumers who make better decisions. At the same time, online marketplaces can operate with considerably less overhead than physical ones, enabling stronger niche markets through greater connections between buyers and sellers.

The second activity is communication. The Internet has enabled a New Media, or Hypermedia, of many-to-many communication, giving people a new way to communicate and propagate information. The core value of the World Wide Web is evident in its founding purpose: created at CERN, it was meant to be a hypertext system that would allow better knowledge sharing across CERN’s global network of scientists. It proved so transformative that the role of the media has changed forever. For example, newspapers that thrived as businesses in the Industrial Age now face challenges to their business models, as younger generations prefer to get their information from Internet services, which are objectively a more effective way to do so.

The third activity is utility. This is a growing area of the Internet, creating new industries and better ways of doing things now that we have a global community of people connected to share information. The traditional software industry is shifting to a service model where, instead of paying for a licence, customers pay an annual subscription to use the software with the browser as the platform (as opposed to a Windows installation on a PC). Cloud computing is a trend pioneered by Google, and now an area of innovation for other major Internet companies such as Amazon and Microsoft, that will make people’s data portable and accessible anywhere in the world. These are disruptive trends that will further embed the Internet into our world.

The Internet will be unnecessarily restricted

All three of the broad activities described above will be affected by a filter.

For markets, the impact of analysis-based filters is that they will likely block access to sites because of the descriptions used to sell items. Some Senators have suggested that hardcore and fetish pornography be blocked: content that may be illegal for minors to view, but is certainly not illegal for consenting adults. For example, legitimate businesses that use the web as their shopfront (such as adultshop.com.au) will be cut off from adults pursuing legal recreational activities. Restricting Australians’ access to information is thus a restriction on trade, and it will affect individuals’ freedoms in their personal lives.

The impact on communications is large. The Internet has created a new form of media called “social media”. Weblogs, wikis, micro-blogging services like Twitter, forums like the Australian start-up Tangler and other forms of social media are likely to have their content, and thus their service, restricted. Because individuals comment freely on these services, their content will be censored and the ability to use the services restricted. “User-generated content” is considered a central tenet of Web 2.0, yet applying Industrial Era controls on content businesses now clashes with people’s public speech, because two concepts that were distinct in that era have now merged.

Furthermore, analysis-based filtering will block legitimate information services because of language that triggers the filter. As noted in the ACMA report, “the filters performed significantly better when blocking pornography and other adult content but performed less well when blocking other types of content”. As a case in point, a site containing the word “breast” could be filtered despite its legitimate value in providing breast cancer awareness.

Utility services could be adversely affected. The increasing trend of computing ‘in the cloud’ means that our computing infrastructure will require an efficient and open Internet. A filter will do nothing but disrupt this, with little ability to achieve the policy goal of preventing access to illegal material. As consumers and businesses move critical functions to the cloud, any disruption to distribution or shortfall in achievable speeds will be a burden on the economy.

Common to all three classes above is the degradation of speeds and access. The ACMA report claims that all six filters tested scored an 88% effectiveness rate in blocking the content the government hoped would be blocked. It also claims that over-blocking of acceptable content was 8% for all filters tested, and that network degradation was not nearly as big a problem as in previous trials, when performance degradation ranged from 75% to 98%. In this latest test, the ACMA said degradation was down, but it still varied widely, from a low of just 2% for one product to a high of 87% for another. In my eyes, a degradation of even 0.1% is a major concern.

The Government has recognised, in the legislation from which it derives its regulatory authority, that “whilst it takes seriously its responsibility to provide an effective regime to address the publication of illegal and offensive material online, it wishes to ensure that regulation does not place onerous or unjustifiable burdens on industry and inhibit the development of the online economy.”

The compliance costs alone will hinder the online economy. ISPs will need to constantly maintain the latest filtering technologies, businesses will need to monitor user-generated content to ensure their web services are not automatically filtered, and administrative delays in unblocking legal sites will hurt profitability and may even kill some start-ups.

And that’s just compliance; let’s not forget the actual impact on users. As Crikey has reported (“Internet filters a success, if success = failure”), even the best filter has a false-positive rate of 3% under ideal lab conditions. Mark Newton (the network engineer whom Senator Conroy’s office recently attacked) reckons that for a medium-sized ISP that’s 3,000 incorrect blocks every second. Another maths-heavy analysis says that every time that filter blocks something, there’s an 80% chance it was wrong.
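To make the arithmetic behind these figures concrete, here is a rough sketch in Python. The inputs are assumptions for illustration only: a hypothetical medium-sized ISP handling 100,000 requests per second (the volume implied by 3,000 wrong blocks at a 3% false-positive rate), the ACMA’s 88% effectiveness figure reused as the filter’s detection rate, and made-up prevalence rates for prohibited content. It does not reproduce the cited analyses; it only shows the base-rate effect they rely on.

```python
# Illustrative base-rate arithmetic for an ISP-level filter.
# All inputs are hypothetical assumptions, not figures from any trial.


def false_blocks_per_second(requests_per_second: float,
                            false_positive_rate: float,
                            prevalence: float = 0.0) -> float:
    """Legitimate requests wrongly blocked each second.

    prevalence is the (assumed) share of requests that are actually
    prohibited; only the remaining legitimate share can be over-blocked.
    """
    legitimate = requests_per_second * (1 - prevalence)
    return legitimate * false_positive_rate


def wrong_block_share(prevalence: float,
                      detection_rate: float,
                      false_positive_rate: float) -> float:
    """Probability that a given block hit legitimate content (Bayes' rule)."""
    true_blocks = detection_rate * prevalence
    false_blocks = false_positive_rate * (1 - prevalence)
    return false_blocks / (true_blocks + false_blocks)


if __name__ == "__main__":
    # Hypothetical ISP load and the 3% lab false-positive rate.
    print(f"{false_blocks_per_second(100_000, 0.03):,.0f} wrong blocks per second")
    # Assumed prevalence of prohibited content: 1% and 0.1% of requests.
    for p in (0.01, 0.001):
        share = wrong_block_share(p, 0.88, 0.03)
        print(f"prevalence {p:.1%}: {share:.0%} of blocks are wrong")
```

Under these assumptions, roughly 77% of blocks would be wrong if 1% of requests were prohibited, and about 97% if only 0.1% were. The rarer the prohibited content, the more a small false-positive rate dominates, which is the kind of effect that produces a figure like the 80% cited above.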

The policy goal will not be met, and this approach will be costly

The Labor Party’s election policy document states that “Labor’s ISP policy will prevent Australian children from accessing any content that has been identified as prohibited by ACMA, including sites such as those containing child pornography and X-rated material.” Other than being a useful propaganda device, this misses the point: to my knowledge, children and people generally don’t actively seek out child pornography, and a filter does nothing to restrict the activities of the offline, real-world social networks of paedophiles.

What the government seems to misunderstand is that a filter regime will prove inadequate in achieving any of this, due to the reality of how information gets distributed on the Internet.

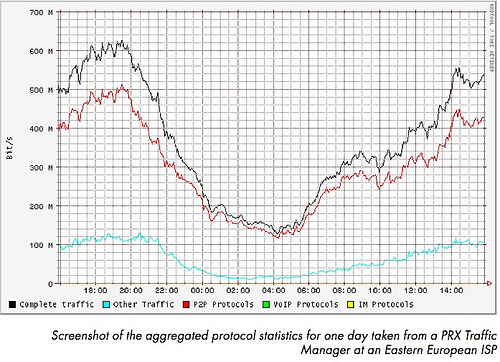

Source: http://www.ipoque.com/userfiles/file/internet_study_2007.pdf

Peer-to-peer (P2P) networks, a legal technology that has also proven impossible to control or filter, account for the majority of Internet traffic, with figures ranging from 48% in the Middle East to 80% in Eastern Europe. The ACMA trials have confirmed that although filters can block P2P traffic, they cannot actually analyse whether the content is illegal. This is because P2P technologies like torrents are completely decentralised. Individual torrents cannot be identified, and combined with encryption technologies, this makes such content impossible to filter or even identify.

Moreover, whether content is blocked or filtered, this ignores the fact that individuals who wish to can bypass the restrictions.

Tor is a network of virtual tunnels used by people living under authoritarian governments around the world; you can install the free software on a USB stick and have it working immediately. It is a sophisticated technology that allows people to bypass restrictions. More significantly, I wish to highlight that some Tor servers have been used for illegal purposes, including child pornography and P2P sharing of copyrighted files using the BitTorrent protocol. In September 2006, German authorities seized data centre equipment running Tor software during a child pornography crackdown, although the Tor network managed to reassemble itself with no impact on its operation. This technology is but one of many options available for people to get around an ISP-level filter.

For a filtering approach to work, it will require not just automated, analysis-based technology but also human effort to maintain the censorship of content. An expatriate Australian in China claims that a staff of 30,000 is employed by the Golden Shield Project (the official name for the Great Firewall) to select what to block, in addition to whatever algorithms are used to block sites automatically. Because automated software will block legitimate online activity, a beefed-up ACMA will be needed to handle requests from the public to investigate and unblock legitimate websites. Given the number of false positives shown in the ACMA trials, this is not to be taken lightly, and it could cost hundreds of millions of dollars in direct taxpayers’ money and billions in opportunity cost for the online economy.

Inappropriate government regulation

The government’s approach to regulating the Internet has been one-dimensional, treating online content as if it were the same kind of content produced by the mass media of the Industrial Era. The Information Age recognises content not as a one-to-many broadcast, but as individuals communicating. Applying provisions from that earlier era therefore imposes a restraint that goes beyond anything applied to traditional publishing.

Regulation of the Internet is provided under the Broadcasting Services Amendment (Online Services) Act 1999 (Commonwealth). Schedules 5 and 7 state that the goals are to:

- Provide a means of addressing complaints about certain Internet content

- Restrict access to certain Internet content that is likely to cause offence to a reasonable adult

- Protect children from exposure to Internet content that is unsuitable for them

Mandatory restriction of access can infringe freedom of expression under Article 19 of the International Covenant on Civil and Political Rights and hinder fair trade in services under the Trade Practices Act.

The government should not mandate restricted access; instead, it should give consumers the option to participate in a system that protects them. What a “reasonable adult” would think is too subjective a judgement to hand to a faceless authority rather than letting each adult decide for themselves.

The Internet is not just content in the communications sense, but also in the market and utility sense. Restricting access to services, which may be done inappropriately due to proven weaknesses in filtering technology, would result in:

- reduced consumer information about goods and services, as sites are incorrectly blocked

- violation of one of the WTO’s cardinal principles, the “national treatment” principle, which requires that imported goods and services be treated the same as those produced locally

- prevention or hindrance of competition, as interpreted under section 4G of the Trade Practices Act. Online businesses would be disadvantaged relative to physical shops, even when they create more accountability by hosting consumer discussion forums, since consumers exercising their freedom of expression there may trigger the filter.

Solution: an opt-in ISP filter that is optional for Australians

Senator Conroy’s crusade against child pornography is not the issue. The issue, in addition to the points raised above, is that mandatory restriction of access to information is by nature a political process. If the Australian Family Association writes an article criticising homosexuals, is that grounds to make the content illegal to access and communicate because it incites discrimination? Perhaps the Catholic Church should have its website banned because of its stance on homosexuality?

If the Liberals win the next election because voters reject the Rudd Government for pushing ahead with this filtering policy, and the Coalition repeats recent history by controlling both houses of Parliament, what will stop them from banning access to the Labor Party’s website?

Of course, these examples sound far-fetched, but they also sounded far-fetched in another vibrant democracy called the Weimar Republic. What I wish to highlight is that pushing ahead with this approach to regulating the Internet sets a dangerous precedent that cannot be downplayed. Australians should be able to access the Internet with government warnings and guidance on content that may cause offence to the reasonable person. The government should also prosecute people who create and distribute material like child pornography, which society universally agrees is wrong. But to mandate restricted access to information on the Internet, based on expensive, imperfect technology that can be routed around, is a Brave New World that will not be tolerated by the broader electorate once they realise their individual freedoms are being restricted.

This system of ISP filtering should not be mandatory for all Australians, nor should it be opt-out by default. Individuals should have the right to opt in to a system like this if there are children using the Internet connection or a household wishes to censor its Internet experience. To force all Australians to experience the Internet only under Government sanction is a mistake of the highest order. Its effectiveness cannot be technologically assured, and it poses a genuine threat to our democracy.

If Senator Conroy’s ministry fails to understand my concerns, responding with a template answer six months later and showing clearly inadequate industry consultation despite my request, perhaps Chairman Rudd can step in. I recognise that with a recession looming, we need to look for ways to prop up our export markets. However, developing in-house expertise in restricting the population, and setting a precedent for the rest of the Western world, is funny only in a nervous-laughter kind of way.

Like many others in the industry, I wish to help the government develop a solution that protects children. But ultimately, I hope our elected representatives understand the importance of this potential policy. I also hope they are aware of the anger at the government’s actions to date, and that whilst democracy can be slow to act, when it hits, it hits hard.

Kind regards,

Elias Bizannes

----

Elias Bizannes works for a professional services firm and is a Chartered Accountant. He is a champion of the Australian Internet industry through the Silicon Beach Australia community and currently serves as Vice-Chair of the DataPortability Project. The opinions in this letter are his own as an individual (not his employer’s), with perspective developed in consultation with the Australian industry.