The DataPortability Project successfully promoted the concept of “data portability” in 2008. However, it has become too successful – people now make announcements that claim to be “data portability” but, misleadingly, are not. Further, the term “Open” has become the new black. But really, when people say they are open – are they?

Status update on the DataPortability Project & context

The DataPortability Project has now developed a strong, transparent governance model for making decisions, which embeds a process to achieve outcomes. We have also formulated our vision, which forms the core DNA of the Project and allows us to align our efforts. Organisationally, we are currently working on a legal entity to protect our online community, and we are doing this whilst also ensuring we work with others in the industry, such as through the discussions we’ve had within the IDTBD proposal with Liberty Alliance, Identity Commons and others.

Our brand communications are nearly finalised (this time, legally vetted), and a refreshed website with a new blog has been rolled out. We’ve put out calls for positions and have already finalised our agreement with a new community manager. (Now open are positions for our analyst roles if you are interested.)

We have a Health Care task force that’s just started, looking to broaden our work into another sector of the economy. We also have a Service Provider Grid task force finalising its work, which, via an online interface and API, will allow people to query which open standards various entities use. We also have a task force that will provide sample EULA and ToS documents that encourage data portability and further our vision.

The DataPortability vision states that people should be able to reuse their data. Traditionally, people have said this means “physically” porting their data amongst web services. Whilst this applies in some cases, it is also about access, as I recently argued.

So to complement our work on the EULA/ToS task force, I believe we need a technology equivalent, which will also give additional value to our Service Provider Grid. This is because Open Standards comply with our vision, and we need to ensure we only support efforts that we believe are worthy.

Hi, I’m open

Open Standards have been a core value that the DataPortability Project has advocated for since its founding, to the point where they have even been confused with its core mission (they’re not). For us, they are an enabler – and it has always been in our interest to see all of them work together.

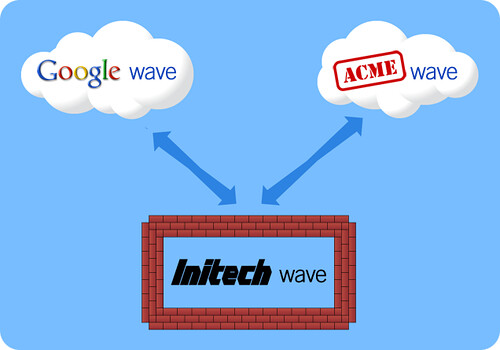

Standards are important because they allow interoperability. For people to be able to access their data from multiple systems, we require systems to be able to easily communicate with each other. Likewise, for people to get value from any data they export from a system, they need to be able to import it – and this can only occur if the data is structured in a way that is compatible with another system.

We advocate “Open” because we want to minimise the cost to businesses that want to comply with our vision. However, during 2008 the term "Open" Standards has been overused, to the point of abuse.

An open standard is a standard that is publicly available and has various rights of use associated with it. But really, what’s open?

– its availability?

– the authority controlling the standard?

– the decision making process over the standard?

Liberty Alliance defines it as:

– The costs for the use of the standard are low.

– The standard has been published.

– The standard is adopted on the basis of an open decision-making procedure.

– The intellectual property rights to the standard are vested in a not-for-profit organisation, which operates a completely free access policy.

– There are no constraints on the re-use of the standard.

That, I believe, perfectly encapsulates what an Open Standard should be. However, as someone who spends his days applying international accounting standards to what companies report in their financials, I can assure you, simply flagging the criteria is only half the fun. Interpreting them is a whole debate in itself.

In my eyes, most of these "open" efforts don’t fit those criteria. To illustrate, I am going to shame myself, as I am a member of a workgroup that claims to be open: the APML workgroup. The group fails the open test because:

– it has a closed workgroup that makes the decisions, without a clearly defined decision making procedure

– it does not have a non-profit behind it, with the copyright owned by a company (although it’s made clear there is no intention to issue patents)

– it has no clear rights attached to it
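
To make the checklist concrete, here is a minimal Python sketch that records the five criteria quoted above and scores APML against them using only the three failures listed in this post. The class name, field names and the True/False/None encoding are shorthand invented for this illustration, not anything defined by Liberty Alliance or the APML workgroup. Treating "no clear rights" as a failure of the re-use criterion is my own reading, and the two criteria this post does not assess for APML are deliberately left unset rather than guessed.

```python
from dataclasses import dataclass, fields
from typing import List, Optional


@dataclass
class OpennessAssessment:
    """One standard scored against the five criteria quoted above.

    True = met, False = not met, None = not assessed.
    All field names are hypothetical shorthand for this sketch.
    """
    name: str
    low_cost: Optional[bool] = None              # costs for use are low
    published: Optional[bool] = None             # the standard has been published
    open_decision_making: Optional[bool] = None  # open decision-making procedure
    nonprofit_ip_holder: Optional[bool] = None   # IP vested in a not-for-profit
    unconstrained_reuse: Optional[bool] = None   # no constraints on re-use

    def _criteria(self):
        # Every field except the standard's name is a criterion.
        return [(f.name, getattr(self, f.name))
                for f in fields(self) if f.name != "name"]

    def failed(self) -> List[str]:
        return [c for c, v in self._criteria() if v is False]

    def unassessed(self) -> List[str]:
        return [c for c, v in self._criteria() if v is None]

    def is_open(self) -> bool:
        # Only counts as open when every criterion is positively met.
        return all(v is True for _, v in self._criteria())


# APML as described in this post: three criteria fail, two are not discussed.
apml = OpennessAssessment(
    name="APML",
    open_decision_making=False,  # closed workgroup, no defined procedure
    nonprofit_ip_holder=False,   # copyright owned by a company
    unconstrained_reuse=False,   # no clear rights attached (an interpretation)
)

print(apml.failed())
print(apml.unassessed())
print(apml.is_open())
```

Keeping "not assessed" separate from "not met" matters here, because interpreting each criterion is where the real debate lies; the sketch only records a verdict, it does not settle one.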

So does that mean every standards group needs to create a legal entity for it to be open? Thankfully no – the Open Web Foundation (OWF) will solve this problem. Or will it? Whilst the decision making process is "open" (you can read the mailing list where the discussion occurs), what about the way it selects members? It’s dependent on being invited. That’s Open with a big But.

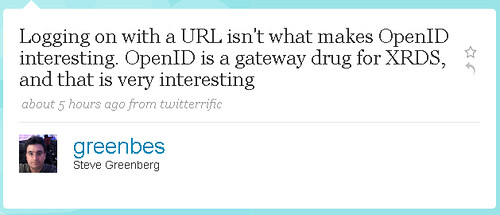

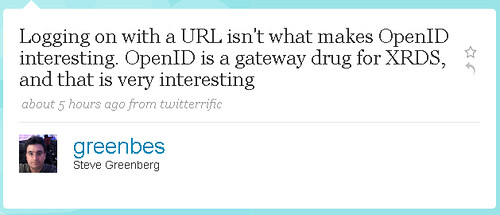

How about OpenID (which I am also a member of) – that poster child for "Open Standards". On the face of it, it fits the bill. But did you know OpenID contains other standards as part of it? As my friend and intellectual mentor Steve Greenberg said:

Now thankfully, XRDS fits the bill as a safe standard. Well, kind of. It has links to another standard, XRI, which is allegedly subject to patent claims. Well, sort of. Kinda. Oh God, let’s not get into a discussion about this again. But don’t give poor APML, the OWF or OpenID too much grief – I could indeed raise some nastier questions, especially of other groups. However, this isn’t about shaming – rather, it’s about raising questions.

The standards communities are fraught with politics, they are murky, and now they are creeping into the infrastructure of our online world. As a proponent of these "Open Standards", I think it’s time we started looking at them with a more critical eye. Yes, I recognise all the issues I’m raising are fixable, but that’s why I want to raise the point, because outside of the traditional authorities like the W3C they are currently being swept under the carpet.

It’s time some boundaries were set on what is effectively the brand of Open. It’s also time the term was defined, because quite frankly, it’s lost all meaning now. I’ve listed some criteria – but what we really need is some consensus on what ‘the’ criteria for Open should be.

