The other week, a good friend of mine from my school and university days dropped me a note. Now that he is transitioning from professional student to legal guru (he’s the type I’d expect to become a judge one day), he asked me to pull down the website that hosts our experiment in digital media from our university days. According to him, it’s become "a bit of an issue because I have two journal articles out, and it’s been brought to my attention that a search brings up writing of a very mixed tone/quality!".

In what now seems like a different lifetime, I ran a university Journalists’ Society, and we experimented with media as a concept. One of our more successful experiments was a cheeky weekly digital newsletter that held the student politicians in our community accountable. Our commentary was often hard-hitting, and for a $4-a-month web hosting bill and about 10 hours of work each, we became a new power on campus, capable of influencing decisions. It was fun, petty, and a big learning experience for everyone involved, including the poor bastards we massacred with accountability.

Privacy in the electronic age: is there an off button?

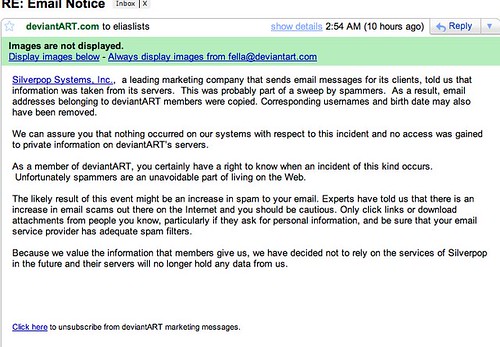

But this touches all of us as we progress through life: what we thought was funny at one time may become awkward now that we are all grown up. In this digitally enabled world, privacy has come to the forefront as an issue, and we are suddenly seeing the scary consequences of having all of our information available to anyone at any time.

I’ve read countless articles about this, as I am sure you have. One story I remember is about a guy who contributed to a marijuana discussion board in 2000 and now struggles to find jobs because his drug-taking past is the number one search engine result. The digital world can really suck sometimes.

Why do we care?

This is unique and awkward because it’s not someone defaming us. It’s not someone taking our words out of context and menacingly presenting them in a way that distorts them. This is 100% us, stating what we thought, with full understanding of the consequences of our actions. We have no one but ourselves to blame.

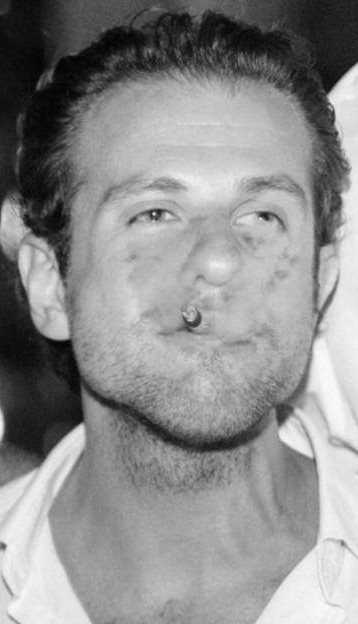

Time changes, even if the picture doesn’t: a partner seeing pictures of you can be OK; an ex seeing them, likely not.

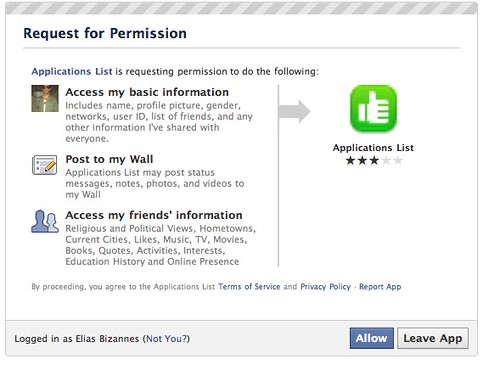

In the context of privacy, is it our right to determine who can see what about us, and when? Is privacy about putting certain information in the "no one else but me" box, or is it more dynamic than that, meaning it varies according to who is consuming the information?

When I was younger, I would often meet attractive girls older than me, and as soon as I told them my age, they would suddenly become embarrassed. Either they left, wondering how they could have let themselves be attracted to a younger man and treating me as if I were suddenly inferior, or they showed a very visible reaction of distress! Quite memorably, when I was 20, I told a girl I was on a date with that I was 22, and she responded: "thank God, because there is nothing more unattractive I find, than a guy that is younger than me". As it turned out, she had just turned 22. My theory about age had just received a massive dose of validation.

The point of sharing this story is that certain information about ourselves can have adverse effects on us (in this case, on my sex life!). I normally couldn’t care less about my age, but with girls I met on nights out, I did care, because it affected their perception of me. Nothing about me had changed, yet that single piece of information about my age totally changed the interaction. Likewise, when we interact with people in our lives, their sudden knowledge of a single piece of information can adversely affect how they perceive us.

Some doors are best kept shut. Kinky for some; stinky for others

A friend of mine recently admitted to his girlfriend of six months that he’s used drugs before, which made her break down crying. This piece of information doesn’t change him in any way, but it shapes her perception of him, and the clash between her perception and the truth creates an emotional reaction. Contrast this with two party girls I met in Spain during my nine months away, who found out that at the age of 21 I had never tried drugs. I disappointed them, and one of them (initially) lost respect for me. These girls and my friend’s girlfriend have two different value systems, and the same piece of information generates completely different perceptions: taking drugs can be seen as the mark of a "bad person" or of an "open-minded" one, depending on who you talk to.

As humans, we care about what other people think. It influences our standing in society, our self-confidence, and our ability to build rapport with other people. But how can you control your image in an environment that is uncontrollable? When I tell one group of people something for the sake of building rapport, I should also be able to ensure that the conversation is not repeated to others who may not appreciate the information. If I have a fetish for women in red heels that I share with my friends, I should be able to prevent that information from reaching my boss, who loves wearing red heels and might feel a bit awkward the next time I look at her feet.

Any solutions?

Not really. We’re screwed.

Well, not quite. To bring it back to the e-mail exchange with my friend: I told him that the historian and technologist in me couldn’t pull down a website for that reason. After all, there is nothing we should be ashamed of. When he insisted, I made him a proposal: what if I could promise that no search engine would include those pages in its index, without having to pull the website down?

He responded with appreciation, as that was the real issue. It wasn’t that he was ashamed of his earlier writing; he just didn’t want today’s academics, reading his leading-edge thinking about the law, to come across his inflammatory criticism of some petty student politicians. He wanted to control his professional image, not erase history. So my solution of adding a robots.txt file was enough to give him the privacy he wanted, without fighting a battle against the uncontrollable.

Who knew that privacy could be achieved with a text file of just two lines:

```
User-agent: *
Disallow: /
```
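If you want to check that a file like this really tells crawlers to stay away, you can feed it to a robots.txt parser. Below is a minimal sketch using Python’s standard-library `urllib.robotparser`; the site URL and crawler names are hypothetical placeholders, not anything from the original site. It also shows a detail worth knowing: this particular parser treats a blank line as the end of a record, so an empty line between `User-agent: *` and `Disallow: /` silently leaves every page crawlable. Other crawlers may parse that case differently, and robots.txt is only honoured voluntarily by well-behaved crawlers.

```python
from urllib.robotparser import RobotFileParser

# The two-line robots.txt: block every crawler from every path.
BLOCK_ALL = """User-agent: *
Disallow: /"""

# The same rules with a blank line between them. Python's parser ends the
# record at the blank line, so the Disallow rule is dropped.
BLOCK_ALL_WITH_GAP = """User-agent: *

Disallow: /"""


def is_allowed(robots_txt: str, agent: str, url: str) -> bool:
    """Return True if `agent` may crawl `url` under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


if __name__ == "__main__":
    # Hypothetical page on the newsletter site, for illustration only.
    page = "https://example.com/newsletter/issue-12.html"
    for agent in ("Googlebot", "Bingbot", "SomeOtherCrawler"):
        print(agent, is_allowed(BLOCK_ALL, agent, page))
    print("With blank line:", is_allowed(BLOCK_ALL_WITH_GAP, "Googlebot", page))
```

Running the script prints `False` for each crawler under the two-line file and `True` for the blank-line variant.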

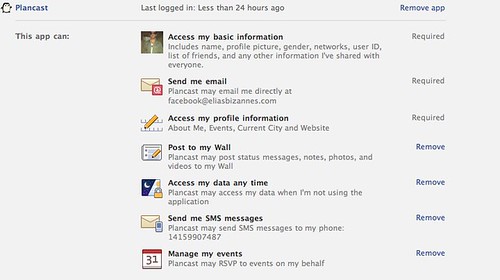

Those two lines are enough to turn the search engines from a beast that ruins our reputations into a mechanism for enforcing our right to privacy. Open standards across the Internet that let us determine how our information is used are what DataPortability can help us achieve, so that we can control our world. The issue of privacy is not dead; we just need some creative applications, once we work out exactly what it is we think we are losing.