I believe the recent controversy that has erupted over the firing of Michael Arrington from TechCrunch represents an era in innovation, led by TechCrunch, that we’re only starting to appreciate.

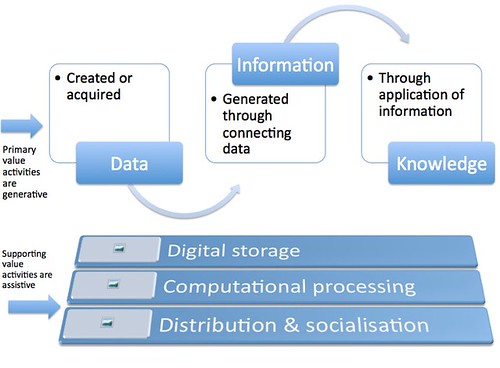

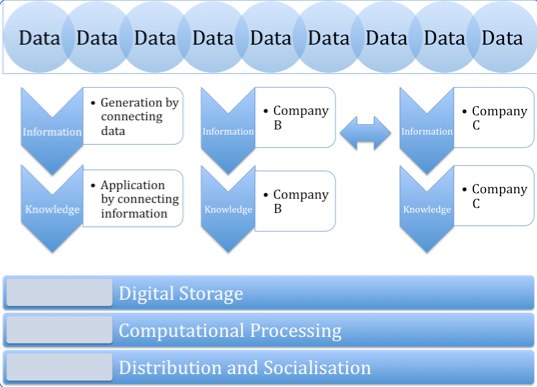

To start this thought experiment, consider that four years ago I wrote about the two kinds of content that exist (and things haven’t changed since): data, like breaking news or archived news; and culture, which includes analysis like editorials and entertainment such as satire.

I argue that each content form has unique characteristics that need to be exploited in different ways. Think about that before digesting this blog post, because understanding the product (such as news) impacts the way the market will operate.

Some trends of the past

Over the last two decades, we’ve seen the form (and costs) of news disrupted dramatically.

It started with hypertext systems that helped humans share knowledge (with the most successful hypertext implementation, the World Wide Web, forever changing the world 20 years ago); then search engines that helped us find information more easily (with Google transforming the world 10 years ago); and then content management systems that helped people reduce the cost of publishing to practically zero (with Movable Type and especially WordPress driving this).

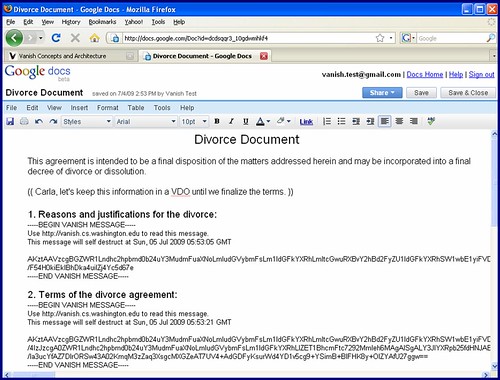

While the sourcing of news still requires unique relationships that journalists can draw on to bring information out to the world, even that has changed: social media has created a distributed ‘citizen journalism’ world. Related to this is a movement Julian Assange calls “scientific journalism”, where the sourcing of news is democratised and exposed in its raw form.

Some observations of the present

With that, I’ve noticed two interesting things about the tech news ecosystem, which is helping shape the trends in news more broadly. First, tech bloggers kill themselves to break stories, to the point where blogs like TechCrunch have become cults for those who work there. Second, the rise of news aggregators like TechMeme and Hacker News (or Slashdot and Digg before them) has built audiences that are overwhelmed by information overload and crave a filter from a quality editorial voice (the latter being why news personalisation technologies cannot work on their own).

The big secret (that’s not particularly secret due to the abundance of ‘share this’ buttons on webpages) about the news ecosystem is that it’s the aggregators who drive traffic to news outlets that report the news. When you understand that point, a lot of other things become clearer.

On the other hand, tech entrepreneurs break their backs in the hope of getting written about on the tech blogs. The reasons range from gaining credibility so they can recruit talent, to exposure so they can raise money, to a belief that they can acquire customers (the whole point of building a startup).

This leads me to think that, despite the randomness of the observations I’ve listed above, there is a fundamental efficiency evolving in news reporting that may give an insight into the future.

Let’s keep thinking. Other things to consider include:

- The audience starts with the aggregators for news, and the articles with better headlines tend to perform better.

- News in its barest form is creating awareness of an event (data); anything additional is analysis (culture), which shapes understanding of the event.

- The rise of ‘scientific journalism’ and social media allows society to discover and share information without a third party (thanks to technology tools).

- Press releases are an invention for communicating a message that reporters can base their writing on, and reporters often just copy and paste the words.

Some thinking about the future

News should be stripped to its barest form: a description of the event. It should be what we currently consider a “headline”, preferably with a link to the source material. Therefore professional journalists, bloggers, and the rest of the world should compete to break news not on who can write the best prose but on who can share a one-line summary, based on their ability to extract that information (either by happening to be at the event or by having an exclusive relationship with the event maker). The cost of breaking the news should simply be a matter of who can share a link the quickest.

Editorial, which is effectively analysis (or entertainment in some cases) and what blogging has become, should be left to what we now consider as “comments”. Readers get to have the “news” coloured, based on a managed curation of the top commentators.

Tying this together: imagine a world where anyone could submit “news” and anyone could provide “editorial”. A rolling river of submitted headlines and links, with discussions roaring underneath each item, reflecting the interpretation of the masses.

You could argue Twitter has become the first true example of that: most content is in full public view but with a restricted output (140 characters); people can share links with their comments; and the top stories tend to get retweeted, which gains them further exposure. Similar things could be said about Digg, Reddit and Hacker News. But these services, along with Twitter (and Facebook), are simply an insight into a future that’s already begun. I think they are just early pioneers before the real solution comes, similar to how Tim Berners-Lee created a hypertext system in a saturated market that then became the standard; Google created a search engine in a saturated market that then became the standard; and WordPress created a blogging platform in a saturated market that then became the standard. Lots of people have tried to innovate in the news ecosystem, but I still don’t think the nut’s been cracked.

News has a lot of value, but the value differs based on who breaks it and who interprets it. For example, when I fire up some of my favourite aggregators, I tend not to click on the original headline but on brands I like, so as to read their take on the event (though when I’m looking deeply into something, I dig for the source material). But the problem with news now is that there is a fundamental disruption to the cost structures supporting it: the economics favour those who break the news, while those who interpret news suffer, as traditionally both these roles were considered one function. Something’s going on, and the answer is cheaper production, faster distribution and more of a decentralised effort across society rather than self-appointed curators.

While the newspaper industry is collapsing, something more fundamental is happening with news and we’re simply in the eye of the storm. Stay tuned.